MCP's New Transport Layer - A Deep Dive into the Streamable HTTP Protocol

Yang Ming

Model Context Protocol (MCP) is a standard protocol for communication between AI models and tools. As AI applications grow increasingly complex and widely deployed, the original communication mechanisms face a series of challenges. PR #206 on GitHub introduces a brand-new Streamable HTTP transport layer, representing a significant improvement over the original HTTP+SSE transport mechanism. This article provides a detailed analysis of the protocol's design philosophy, technical specifics, and practical applications.

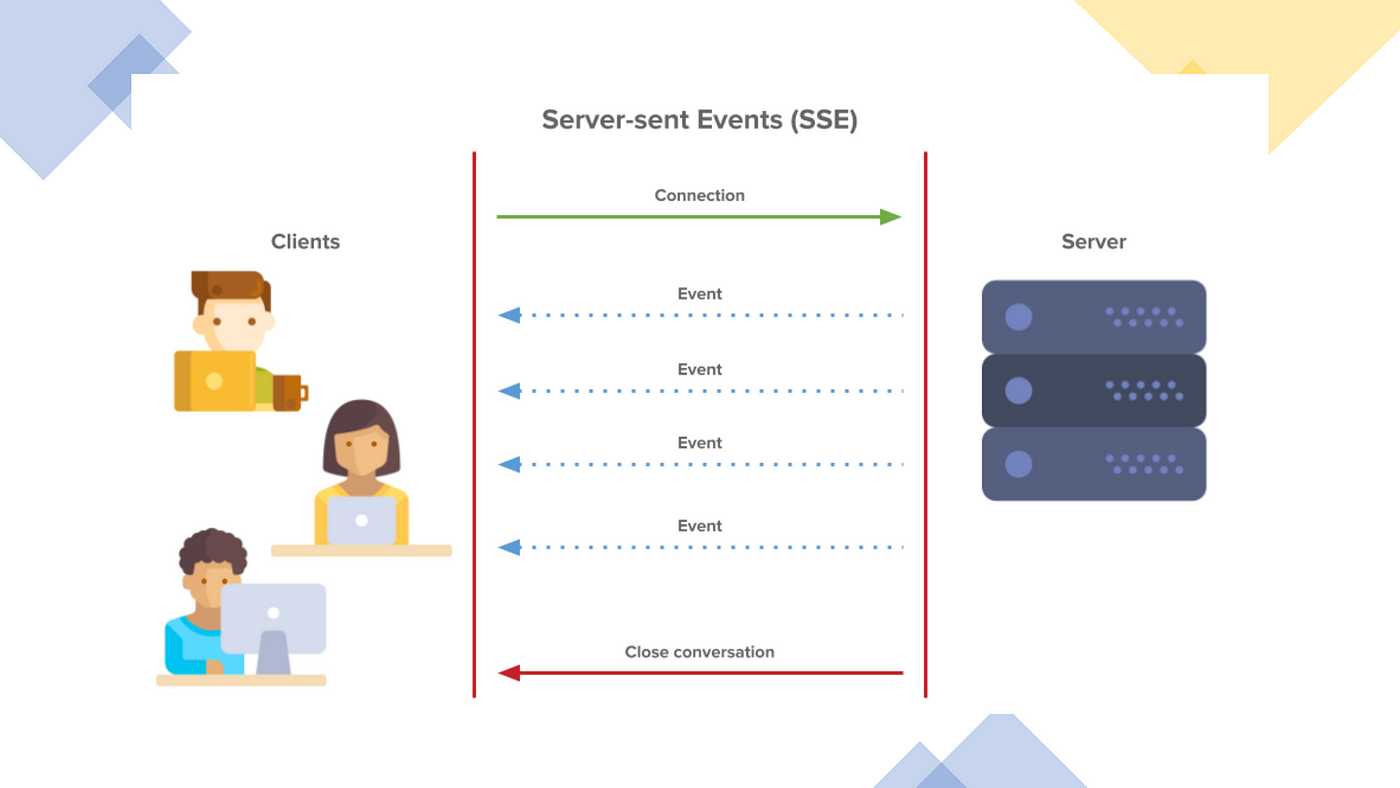

Original HTTP+SSE Transport Mechanism and Its Limitations

In the original MCP implementation, clients and servers communicate through two main channels:

- HTTP Request/Response: Clients send messages to the server via standard HTTP requests

- Server-Sent Events (SSE): The server pushes messages to clients through a dedicated

/sseendpoint

Key Issues

While this design is straightforward, it presents several critical problems:

No Support for Reconnection/Recovery

When an SSE connection drops, all session states are lost, forcing clients to re-establish the connection and reinitialize the entire session. For example, a large file analysis task in progress would be completely interrupted due to unstable WiFi, requiring users to restart the entire process.

Servers Must Maintain Long-Lived Connections

Servers must maintain long-lived SSE connections for each client, leading to significant resource consumption with high concurrency. When servers need to restart or scale, all connections are interrupted, impacting user experience and system reliability.

Server Messages Can Only Be Delivered via SSE

Even for simple request-response interactions, servers must return information through the SSE channel, creating unnecessary complexity and overhead. Certain environments (e.g., cloud functions) are unsuitable for maintaining long-lived SSE connections.

Infrastructure Compatibility Limitations

Many existing web infrastructures—such as CDNs, load balancers, and API gateways—may not properly handle long-lived SSE connections. Enterprise firewalls might forcibly terminate timed-out connections, resulting in unreliable service.

Streamable HTTP: Design and Principles

Streamable HTTP is built on the following core principles:

- Maximized Compatibility: Seamless integration with existing HTTP ecosystems

- Flexibility: Support for both stateless and stateful modes

- Resource Efficiency: On-demand resource allocation, avoiding unnecessary long-lived connections

- Reliability: Support for reconnection and session recovery

Key Improvements

Compared to the original mechanism, Streamable HTTP introduces several critical enhancements:

- Unified Endpoint: Removes the dedicated

/sseendpoint, consolidating all communication through a single endpoint (e.g.,/message) - On-Demand Streaming: Servers can flexibly choose between returning standard HTTP responses or upgrading to SSE streams

- Session Identification: Introduces a session ID mechanism to support state management and recovery

- Flexible Initialization: Clients can actively initialize SSE streams via empty GET requests

Technical Details

The workflow of Streamable HTTP is as follows:

-

Session Initialization:

- Clients send initialization requests to the

/messageendpoint - Servers may generate and return a session ID to the client

- The session ID is used to identify the session in subsequent requests

- Clients send initialization requests to the

-

Client-to-Server Communication:

- All messages are sent via HTTP POST requests to the

/messageendpoint - If a session ID exists, it is included in the request

- All messages are sent via HTTP POST requests to the

-

Server Response Methods:

- Standard Response: Returns a direct HTTP response, suitable for simple interactions

- Streaming Response: Upgrades the connection to SSE, sends a series of events, then closes

- Long-Lived Connection: Maintains an SSE connection for continuous event delivery

-

Active SSE Stream Establishment:

- Clients can send GET requests to the

/messageendpoint to actively establish SSE streams - Servers can push notifications or requests through this stream

- Clients can send GET requests to the

-

Connection Recovery:

- If a connection drops, clients can reconnect using the previous session ID

- Servers can restore session states and resume prior interactions

Practical Application Scenarios

Stateless Server Mode

Scenario: Simple tool API services, such as mathematical calculations or text processing.

Implementation:

Client Server

| |

|-- POST /message (calculation req) ->|

| |-- Executes calculation

|<------- HTTP 200 (result) ---------|

| |

Advantage: Minimal deployment, no state management required, suitable for serverless architectures and microservices.

Streaming Progress Feedback Mode

Scenario: Long-running tasks, such as large file processing or complex AI generation.

Implementation:

Client Server

| |

|-- POST /message (process req) ----->|

| |-- Starts processing task

|<------- HTTP 200 (SSE starts) -----|

| |

|<------- SSE: 10% progress ----------|

|<------- SSE: 30% progress ----------|

|<------- SSE: 70% progress ----------|

|<------- SSE: Complete + result -----|

| |

Advantage: Provides real-time feedback without requiring permanent connection states.

Complex AI Session Mode

Scenario: Multi-turn AI assistants requiring context maintenance.

Implementation:

Client Server

| |

|-- POST /message (initialize) ------>|

|<-- HTTP 200 (session ID: abc123) --|

| |

|-- GET /message (session ID: abc123)|

|<------- SSE stream established ----|

| |

|-- POST /message (Q1, abc123) ------>|

|<------- SSE: Thinking... ----------|

|<------- SSE: Answer 1 -------------|

| |

|-- POST /message (Q2, abc123) ------>|

|<------- SSE: Thinking... ----------|

|<------- SSE: Answer 2 -------------|

Advantage: Maintains session context, supports complex interactions, and enables horizontal scaling.

Disconnection Recovery Mode

Scenario: AI applications in unstable network environments.

Implementation:

Client Server

| |

|-- POST /message (initialize) ------>|

|<-- HTTP 200 (session ID: xyz789) --|

| |

|-- GET /message (session ID: xyz789)|

|<------- SSE stream established ----|

| |

|-- POST /message (long task, xyz789)|

|<------- SSE: 30% progress ----------|

| |

| [Network interruption] |

| |

|-- GET /message (session ID: xyz789)|

|<------- SSE stream re-established -|

|<------- SSE: 60% progress ----------|

|<------- SSE: Complete -------------|

Advantage: Improves reliability in weak network conditions, enhancing user experience.

Key Advantages of Streamable HTTP

Technical Advantages

- Simplified Implementation: Works on standard HTTP servers without special requirements

- Resource Efficiency: Allocates resources on demand, avoiding long-lived connections per client

- Infrastructure Compatibility: Works well with existing web infrastructures (CDNs, load balancers, API gateways)

- Horizontal Scalability: Supports routing requests to different server nodes via message buses

- Progressive Adoption: Service providers can choose implementation complexity based on needs

- Reconnection Support: Enables session recovery for improved reliability

Business Advantages

- Reduced Operational Costs: Lowers server resource consumption and simplifies deployment architecture

- Enhanced User Experience: Improves experience through real-time feedback and reliable connections

- Broad Applicability: Suitable for everything from simple tools to complex AI interactions

- Scalability: Supports more diverse AI application scenarios

- Developer-Friendly: Lowers the technical barrier to MCP implementation

Implementation Reference

Server-Side Implementation Highlights

-

Endpoint Design:

- Implement a single

/messageendpoint for all requests - Support both POST and GET HTTP methods

- Implement a single

-

State Management:

- Design session ID generation and validation mechanisms

- Implement session state storage (in-memory, Redis, etc.)

-

Request Handling:

- Parse session IDs from requests

- Determine response type (standard HTTP or SSE)

- Handle content types and formats for streaming responses

-

Connection Management:

- Implement SSE stream initialization and maintenance

- Handle disconnection and reconnection logic

Client-Side Implementation Highlights

-

Request Construction:

- Build messages compliant with the protocol

- Correctly include session IDs (if applicable)

-

Response Handling:

- Detect whether responses are standard HTTP or SSE

- Parse and process SSE events

-

Session Management:

- Store and manage session IDs

- Implement reconnection logic

-

Error Handling:

- Handle network errors and timeouts

- Implement exponential backoff retry strategies

Conclusion

The Streamable HTTP transport layer represents a significant evolution of the MCP protocol. By combining the strengths of HTTP and SSE while overcoming their limitations, it provides a more flexible and reliable communication solution for AI applications. It not only addresses the issues of the original transport mechanism but also lays the groundwork for future, more complex AI interaction patterns.

This protocol's design embodies practicality, balancing technical sophistication with compatibility with existing web infrastructures. Its flexibility allows developers to choose the most suitable implementation for their needs, from simple stateless APIs to complex interactive AI applications.

With the merging of this PR, the MCP community's technical ecosystem will become even richer, lowering the barrier to adoption for more developers. In the near future, we expect to see widespread adoption of Streamable HTTP-based MCP implementations across various AI applications.